Exploring CNNs and RNNs: Key Architectures in Deep Learning

In the fascinating world of Deep Learning, we often hear about AI that can “see” and “understand” the world in ways that mimic human intelligence. From facial recognition on your smartphone to the voice assistant that understands your commands, these incredible feats are powered by specialized neural network architectures. While there are many types of networks, two stand out as the foundational pillars for a huge number of modern AI applications: Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs).

Understanding the difference between these two architectures is key to understanding how AI solves different kinds of problems. They are not competing technologies; rather, they are specialized tools designed to excel at processing specific types of data. CNNs are the “eyes” of AI, while RNNs are the “ears” and “memory.”

Convolutional Neural Networks (CNNs): The Masters of Visual Data

Imagine trying to teach a computer to recognize a cat in a photograph. For a human, this is effortless. But for a computer, a picture is just a grid of pixels. How can it learn to identify the features—the whiskers, the pointy ears, the eyes—that make up a cat, regardless of its position, size, or lighting in the image?

This is the problem that Convolutional Neural Networks (CNNs) were born to solve.

How they work:

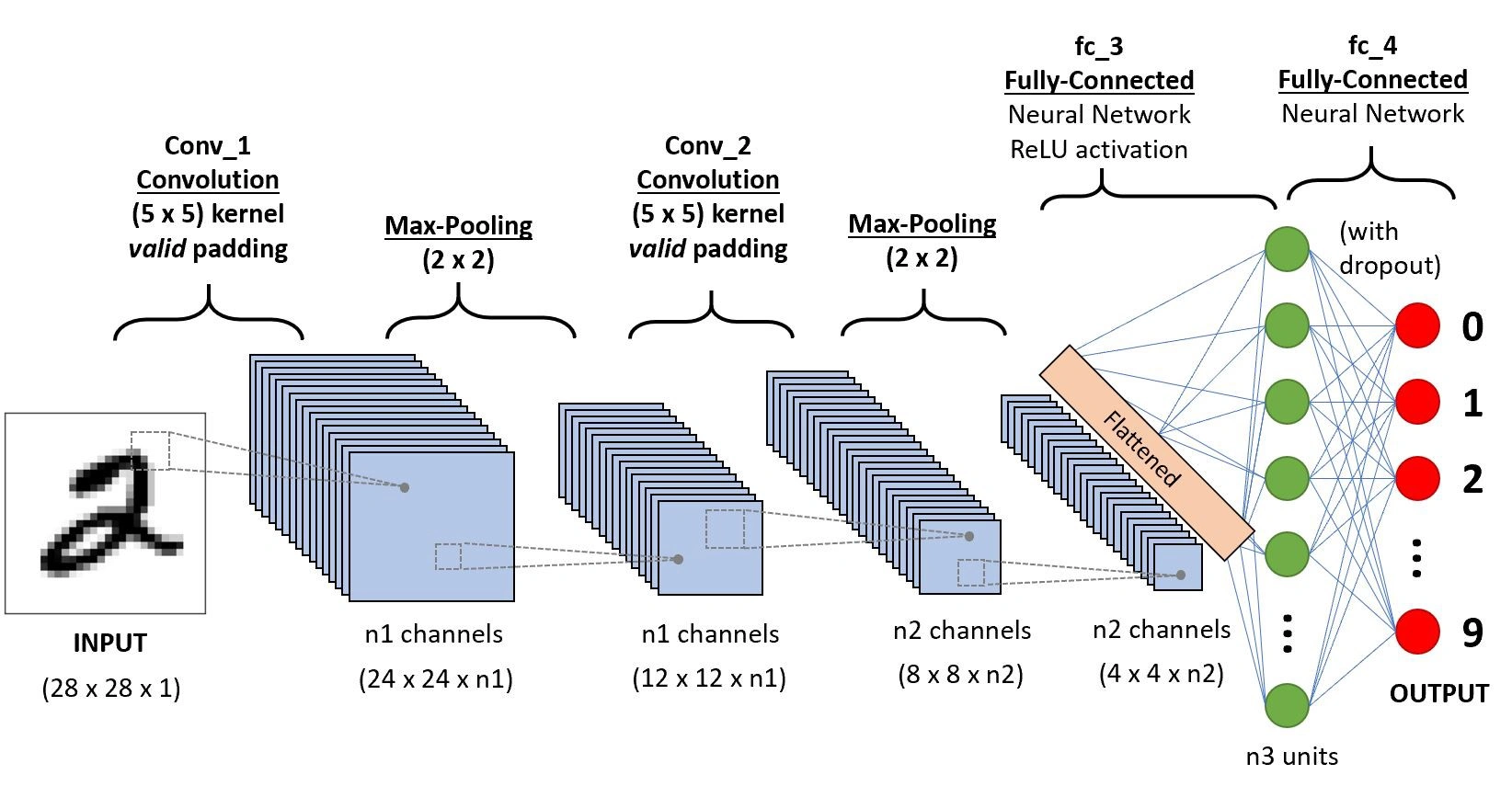

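The core of a CNN is a mathematical operation called a convolution. Instead of connecting every pixel to every neuron at once, a CNN slides a small filter (or “kernel”) across the image and computes a weighted sum of the pixels under it at each position. Each filter responds to a specific, simple feature, like an edge, a corner, or a patch of color. The filter values are not designed by hand; the network learns them during training.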
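To make the sliding-filter idea concrete, here is a minimal sketch in plain Python. The tiny image, the `convolve2d` helper, and the hand-picked vertical-edge filter are all illustrative assumptions. Like most deep learning libraries, the sketch does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Weighted sum of the image patch currently under the filter.
            total = sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            row.append(total)
        feature_map.append(row)
    return feature_map


# A 4x6 grayscale image: dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
]

# Hand-picked vertical-edge filter (assumption: a trained CNN would learn its own values).
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = convolve2d(image, vertical_edge)
for row in feature_map:
    print(row)
# [0, 3, 3, 0]
# [0, 3, 3, 0]
```

The feature map is zero over the flat regions and large where the filter straddles the dark-to-bright boundary, which is what "detecting an edge" means in practice.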

The network has many of these filters, each looking for a different feature. As the image passes through the layers of the network, these simple features are combined into more complex ones.

- Layer 1: Detects simple edges and curves.

- Layer 2: Combines edges into simple shapes and textures, such as circles or fur patterns.

- Layer 3: Combines shapes into object parts, such as eyes, ears, and whiskers.

- Final Layer: Combines the parts into a prediction, such as “This is a cat.”

This hierarchical approach, combined with reusing the same small filters at every position in the image, makes CNNs efficient and effective at understanding spatial data, where the arrangement of the parts is crucial to understanding the whole.
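One way to see where the efficiency comes from is to count trainable parameters. The sketch below compares a fully connected layer with a convolutional layer on the same input. The 224x224 RGB input size, the 64 units or filters, and the two helper functions are assumptions chosen for illustration.

```python
def dense_params(height, width, channels, units):
    # Every input value connects to every unit, plus one bias per unit.
    return height * width * channels * units + units


def conv_params(kernel_h, kernel_w, in_channels, filters):
    # Each filter has one weight per kernel cell per input channel, plus one bias.
    # The same weights are reused at every image position.
    return kernel_h * kernel_w * in_channels * filters + filters


dense = dense_params(224, 224, 3, 64)  # fully connected layer with 64 units
conv = conv_params(3, 3, 3, 64)        # convolutional layer with 64 filters of size 3x3

print(f"dense layer: {dense:,} parameters")  # dense layer: 9,633,856 parameters
print(f"conv layer:  {conv:,} parameters")   # conv layer:  1,792 parameters
```

Under these assumptions the convolutional layer needs several thousand times fewer parameters, and its count does not grow with the image size.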

Where CNNs are used:

- Image Classification and Facial Recognition: The classic use case.

- Object Detection in Self-Driving Cars: Identifying pedestrians, other cars, and traffic signs.

- Medical Image Analysis: Detecting tumors in MRIs or signs of disease in X-rays.

- Document Analysis: Reading and classifying scanned documents.

The development of these architectures is a major focus of advanced research. For those looking to go deeper, a deep learning program from a premier institution such as the Indian Institute of Science (IISc) offers a research-driven treatment of the theory and application of these networks.

Recurrent Neural Networks (RNNs): The Specialists in Sequential Data

Now, imagine a different kind of problem. How do you teach a computer to understand a sentence? The meaning of a sentence depends on the order of the words. “The dog chased the cat” means something completely different from “The cat chased the dog.” The data is sequential, and context from previous elements is crucial.

This is where Recurrent Neural Networks (RNNs) shine.

How they work:

The key feature of an RNN is its “loop.” When an RNN processes an element in a sequence (like a word in a sentence), it doesn’t just produce an output; it also “remembers” a summary of the information it has seen so far and passes this memory along to the next step.

This recurrent, looping mechanism allows the network to maintain a “context” or “state” as it moves through the sequence. In the sentence “The cat was tired, so it slept,” a trained network can link the word “it” back to the cat, because its state still carries information from seeing “cat” earlier in the sequence.
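The loop can be sketched in a few lines of plain Python. This is a deliberately tiny recurrent cell with a single hidden unit. The weights `w_x`, `w_h`, and `b` are fixed by hand here as an assumption; a real network would learn them and would use vectors and matrices rather than single numbers.

```python
import math


def rnn_step(x, h_prev, w_x, w_h, b):
    """One recurrent step: mix the current input with the previous state."""
    return math.tanh(w_x * x + w_h * h_prev + b)


def run_rnn(sequence, w_x=0.8, w_h=0.5, b=0.0):
    h = 0.0  # empty memory before the first element
    states = []
    for x in sequence:
        # The "loop": the state from the previous step feeds into this one.
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states


# The same three inputs in two different orders.
forward = run_rnn([1.0, 0.5, -1.0])
backward = run_rnn([-1.0, 0.5, 1.0])

print("final state, original order:", forward[-1])
print("final state, reversed order:", backward[-1])
```

Both runs see the same inputs, but the final states differ because each step depends on what came before it. This is the same property that lets an RNN tell “The dog chased the cat” apart from “The cat chased the dog.”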

Where RNNs are used:

- Natural Language Processing (NLP): Machine translation, sentiment analysis, and text generation.

- Speech Recognition: Converting spoken audio (a sequence of soundwaves) into text.

- Time Series Analysis: Predicting stock prices or weather patterns based on historical data.

- Music Generation: Creating new melodies based on the patterns of existing music.

The Evolution: LSTMs, GRUs, and Transformers

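While classic RNNs are powerful, they struggle with “long-term memory,” that is, remembering context from very early in a long sequence. The main cause is the vanishing gradient problem: the training signal shrinks as it is propagated back through many time steps, so early inputs have little influence on what the network learns. To address this, more advanced variants were created, such as Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs). These use mechanisms called “gates” that control what is kept, updated, or forgotten at each step, which makes them much better at learning long-range dependencies.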
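The effect of a gate can be illustrated with a simplified, GRU-style update on a single hidden unit. This is a sketch under strong assumptions: the weights are fixed by hand, and the gate value is set manually instead of being computed from the input and state with learned weights, as a real LSTM or GRU would do.

```python
import math


def plain_step(x, h_prev, w_x=1.0, w_h=0.5):
    # Classic RNN update: the old state is squashed and rescaled at every step.
    return math.tanh(w_x * x + w_h * h_prev)


def gated_step(x, h_prev, update_gate, w_x=1.0, w_h=0.5):
    candidate = math.tanh(w_x * x + w_h * h_prev)
    # update_gate near 0 keeps the old state; near 1 overwrites it with the candidate.
    return (1.0 - update_gate) * h_prev + update_gate * candidate


# One informative input followed by 20 steps of "nothing new".
sequence = [1.0] + [0.0] * 20

h_plain = 0.0
h_gated = 0.0
for t, x in enumerate(sequence):
    h_plain = plain_step(x, h_plain)
    gate = 1.0 if t == 0 else 0.05  # write at the first step, then mostly hold
    h_gated = gated_step(x, h_gated, gate)

print("plain RNN state after 21 steps:", h_plain)
print("gated state after 21 steps:   ", h_gated)
```

In the plain cell the trace of the first input decays to almost nothing, while the gated cell still holds a clearly nonzero state. Real gated networks learn when to open and close their gates, but the mechanism for preserving information is the same.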

More recently, the Transformer architecture, which uses a mechanism called “self-attention,” has reshaped sequence modeling. It has largely replaced RNNs and LSTMs in state-of-the-art NLP and underlies models such as GPT.
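As a rough illustration of self-attention, the sketch below implements scaled dot-product attention in plain Python. It is simplified on purpose: each input vector is used directly as its own query, key, and value, whereas a real Transformer first applies learned projection matrices and uses multiple attention heads. The three two-dimensional "token" vectors are made up for the example.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention with identity projections (a simplification)."""
    d = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Similarity of this position to every position, scaled by sqrt(d).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
            for key in vectors
        ]
        weights = softmax(scores)
        # Output is a weighted average of all value vectors.
        output = [
            sum(w * value[i] for w, value in zip(weights, vectors))
            for i in range(d)
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens)

for i, row in enumerate(weights):
    print(f"token {i} attends with weights", [round(w, 3) for w in row])
```

Unlike an RNN, every position looks at every other position directly in a single step, so information from early in the sequence does not have to survive a long chain of state updates.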

Learning the Architectures: Your Path to Building Intelligent Systems

Understanding the fundamental differences between CNNs and RNNs is the first step toward becoming a proficient deep learning practitioner. With practice, you learn to look at a problem and recognize which architecture fits the structure of its data.

For anyone looking to build these powerful systems, a structured and comprehensive deep learning course is essential. Such a course will provide not just the theoretical understanding of these architectures but also the hands-on coding experience needed to build, train, and deploy them to solve real-world problems.

Conclusion: The Right Tool for the Right Job

CNNs and RNNs are the workhorses of the deep learning world. They represent two different but equally brilliant ways of designing neural networks to understand the structure of data. CNNs excel at understanding spatial hierarchies in data like images, while RNNs and their successors excel at understanding the temporal, ordered nature of sequential data like language and time series. By mastering these key architectures, developers and data scientists can unlock the ability to build a vast array of intelligent applications that can see, hear, and understand the world in truly remarkable ways.